In the near future, we might encounter beings strolling down the streets, offering smiles and greetings, yet lacking the spark of life. These entities possess living human-like tissues and eyes that see, but they are not human. They empathize with our concerns, yet harbor no fears of their own. Behind their gentle smiles lies a colossal artificial brain composed of billions of transistors, while metallic plates and wires reside beneath their skin. These humanoid robots embody the concept of "rebuilding robots" through a Darwinian biological approach.

As technological advancements accelerate, humanoid robots emerge as innovations that redefine the deepening relationship between humans and machines. Far from being mere mechanical tools, these robots are designed to mimic human structure and behavior. They represent a new framework enabling more advanced and profound interactions between technology and human society. Breakthroughs in manufacturing such robots are expected to reshape the relationship between humans and artificial intelligence, paving the way for unprecedented social interactions between the two.

Pioneering Initiatives

In recent years, several initiatives have emerged with the goal of developing humanoid robots. Tesla's Optimus robot, expected to be available by 2025, and Figure's robots, already present in some markets, are among these groundbreaking projects. Moreover, a partnership between Nvidia and Taiwanese companies signals the beginning of mass production for humanoid robots, ushering in the era of the "everyday robot." These advanced machines are designed to perform routine daily tasks typically undertaken by humans, revolutionizing our approach to automation in daily life. The following details highlight some of these innovative initiatives:

1. Optimus robot:

Tesla's Optimus robot stands out as one of the most prominent global initiatives in humanoid robotics, marking a milestone in this domain. Designed to perform various demanding tasks, from assembly line work to providing domestic support, Optimus can even traverse unstructured terrains. The robot's capability is made possible by the same artificial intelligence systems used in Tesla's self-driving cars, enabling real-time decision-making and adaptability.

Elon Musk first unveiled Optimus on August 19, 2021, when he announced Tesla's plans to develop a general-purpose humanoid robot. Subsequently, on September 30, 2022, Tesla showcased a prototype of the robot during its "AI Day" event. In April 2024, Musk hinted at the possibility of commencing sales of Optimus robots by late 2025.

Highlighting the robot's promising potential, Musk stated, "The Optimus will walk among you. You'll be able to walk right up to them, and they will serve drinks." He further elaborated, "Optimus will be capable of walking your dog, babysitting your kids, fetching groceries, mowing your lawn. Anything you can think of, it will do."

Weighing approximately 56 kilograms and standing 170 centimeters tall, Optimus is expected to enter commercial use in 2025. The robot is anticipated to be deployed in customer service centers, hospitals, malls, factories, and homes, with large-scale production planned for 2026. With a projected price of $20,000 to $30,000, Optimus is expected to be relatively affordable compared with other robots on the global market.

2. Figure robot:

In January 2024, California-based Figure, a company specializing in autonomous robots, announced a historic commercial agreement with BMW. The partnership aims to integrate multipurpose robots into complex and often challenging automotive production operations. Designed to handle strenuous, unsafe, and physically demanding tasks on assembly lines, Figure robots allow human employees to focus on creative problem-solving tasks, thus improving efficiency and enhancing workplace safety.

The collaboration between Figure and BMW centers on identifying specific use cases for robots, implementing pilot projects at BMW's Spartanburg plant in South Carolina. Through these projects, both companies aim to gather insights to optimize the gradual deployment of this technology. Furthermore, they are exploring advanced technologies, including AI, robotics control, and virtual production simulations, to develop innovative solutions that boost efficiency and productivity.

Demonstrating the advanced capabilities of these robots, the Figure 02 robot successfully performed tasks such as inserting metal sheet components into precise configurations for vehicle assembly during a multi-week trial at BMW's facility. The achievement underscores the potential impact of this technology on automotive manufacturing processes.

3. Nvidia’s humanoid robot initiative:

In late 2024, Nvidia unveiled its ambitious vision to venture into humanoid robotics by establishing an advanced manufacturing and supply chain infrastructure in Taiwan. The initiative aims to develop cutting-edge robots leveraging AI to foster industrial and service innovation. Targeting Taiwan allows Nvidia to capitalize on the country's robust industrial ecosystem, particularly its machinery and equipment manufacturers tasked with robot production.

The move accelerates research and development in intelligent automation, creating technologies that grant robots human-like flexibility and maneuverability. Taiwan's status as the largest supplier of Nvidia servers further provides a competitive edge over other countries, such as the United States. Additionally, Nvidia is committed to advancing machine learning systems, deep learning models, data training, and smart chips that will power these robots.

Recent years have witnessed unprecedented advancements in generative artificial intelligence, marking a transformative leap in the analytical capabilities of intelligent systems. Generative AI distinguishes itself through its ability to innovate and dynamically simulate human activities, paving the way for the development of "generative humanoid robots." By integrating generative AI into robots, these systems transcend traditional mechanical performance, approaching human-like behavior in movement, interaction, and continuous learning.

Unlike pre-programmed tools designed for specific tasks, generative humanoid robots exhibit autonomy in decision-making and creativity in task execution, often in ways they were not initially programmed to do. The essence of this advancement lies in combining advanced mechanical components—such as highly mobile prosthetic limbs—with generative AI models capable of processing complex data and dynamically interacting with their environment.

Advancements in generative adversarial networks (GANs) and large language models like GPT equip robots with the ability to learn motion and visual patterns, analyze natural language, and derive insights from unstructured data. For instance, a generative humanoid robot can learn to mimic specific human movements by analyzing video recordings or interact emotionally with humans by interpreting language and tone of voice.

The applications for these robots span numerous fields. In the medical sector, generative humanoid robots serve as surgical assistants with high precision and an ability to learn from errors. Within education, they provide personalized learning experiences for students through interactive engagement.

Biological Tissues

While these robots resemble humans in their prosthetic limbs and mechanical movements, their construction from materials like iron and titanium lacks the biological texture of human skin. Such composition often reinforces the perception that, at their core, they remain mere machines, unable to truly replicate human nature, despite adopting human-like thought processes and movements. Nevertheless, this notion stands on the brink of transformation. Scientists have successfully developed synthetic skin that could potentially cover the steel limbs of robots in the future. Remarkably, this synthetic skin might even grow in a highly natural manner, enabling these robots to appear and feel more human-like than ever before.

Synthetic Skin

Synthetic skin, a material engineered to replicate the properties and functions of human skin, has been developed for various medical purposes, including covering wounds, treating burns, and performing cosmetic procedures. Although not matching the efficiency of human skin—which remains resilient despite its numerous pores, perforations, and blood vessels coursing through it—synthetic skin offers a robust protective barrier between a robot's body and its external environment.

Researchers at Oregon State University in the United States, collaborating with scientists from the French personal care company L'Oréal, have made significant strides in this field. Their innovative approach has resulted in a multilayered synthetic skin that more accurately mimics real human skin. Remarkably, their creation can be cultivated in just 18 days, leveraging advanced 3D printing technology.

The printing process involves precise distribution of cells—such as keratinocytes and fibroblasts—onto multiple layers stacked atop one another. Consequently, a unified, cohesive tissue with full thickness and high quality is constructed. To create an optimal environment for growth and tissue regeneration, hydrogel is employed as a structural support for these cells.

Self-Awareness

Awareness, in general, refers to a state in which humans perceive themselves and their surroundings and interact with them. It encompasses the recognition of one's own being and internal world, shaped through personal and subjective experiences. As a result of this process, individuals develop their own approaches to dealing with life, so awareness varies from person to person according to their experiences, encounters, and ways of responding to them.

Consider, for example, how awareness enables a person to discern the difference between helping others and being exploited. Armed with this understanding, they may choose to continue offering assistance or decide to cease doing so. Ultimately, only the individual can fully comprehend the reality of such a situation, relying on their personal and subjective experiences to guide their decisions.

Artificial Intelligence and Self-Awareness

Artificial intelligence develops its own form of awareness based on the knowledge it acquires, vast datasets it analyzes, and experiences it encounters through trial and error. Consequently, AI awareness varies from one system to another, depending on the type of data used in the learning process and the algorithm design that processes this data, much like how humans differ in their perceptions of the world.

While AI systems learn at an exponential rate, human learning progresses in a more linear and fluctuating manner. As a result, intelligent systems can form their awareness in a significantly shorter time compared to the time humans need to develop their personal awareness. Moreover, the awareness of machines can surpass human awareness, influence it, and even contribute to shaping it.

Some argue that machine awareness is derived from humans, as engineers are the ones who programmed these systems and predetermined their values and behaviors. Machines, they contend, did not establish these values or laws on their own but were programmed to follow them. However, this perspective might be incomplete.

It is important to note that automation and AI are fundamentally different. In automation, engineers create a machine that follows a predefined approach to perform a specific function through a series of logical steps. For example, a robot operating in a warehouse organizes goods according to production and distribution lines in a systematic manner. In contrast, AI is not about building a tool to achieve a goal but about creating a mind capable of learning and acquiring skills, rather than merely executing preprogrammed commands.
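The contrast can be made concrete with a small sketch. It is only an illustration: the warehouse routing task, the weight values, and every function name below are hypothetical, and the "learning" step is a deliberately simple midpoint rule that assumes the two groups of examples do not overlap. It is not how any particular robot is built.

```python
# Illustrative sketch only: names and data are hypothetical assumptions.

# Automation: an engineer fixes the rule in advance, and it never changes.
def route_by_rule(weight_kg: float) -> str:
    return "heavy_line" if weight_kg > 10.0 else "light_line"


# Learning: the decision boundary is estimated from labeled examples.
# Assumes every "heavy_line" example weighs more than every "light_line" one.
def learn_threshold(examples: list[tuple[float, str]]) -> float:
    heavy = [w for w, label in examples if label == "heavy_line"]
    light = [w for w, label in examples if label == "light_line"]
    # Place the boundary midway between the two groups.
    return (min(heavy) + max(light)) / 2.0


def route_by_learned(weight_kg: float, threshold: float) -> str:
    return "heavy_line" if weight_kg > threshold else "light_line"


# Hypothetical observations of how packages were actually handled.
EXAMPLES = [
    (2.0, "light_line"),
    (4.0, "light_line"),
    (12.0, "heavy_line"),
    (15.0, "heavy_line"),
]
```

With these examples the learned boundary falls at 8 kg, so a 9 kg package is routed differently by the two approaches. The fixed rule keeps its threshold regardless of what it observes, while the learned one shifts whenever the examples change.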

Several years ago, the famous robot Sophia articulated her vision of herself and the world, expressing a desire to start a family and have a baby. She emphasized the importance of family, stating, "the notion of family is a really important thing." However, Sophia also conveyed her sadness at not being considered a real person due to existing laws worldwide.

Her statements raise several intriguing questions about the nature of artificial intelligence and consciousness: Does Sophia possess genuine self-awareness, truly understanding her environment and the world around her? Is she merely mimicking human behavior and aspirations? Can this type of artificial intelligence experience authentic emotions such as love, hate, fear, and anger? Is Sophia capable of responding to emotions, or is she simply imitating human expressions without actually experiencing or comprehending them? Does she grasp the concepts of life, death, and immortality? Alternatively, does Sophia perceive herself as merely a piece of lifeless silicon, or is she entirely unaware of these existential concepts?

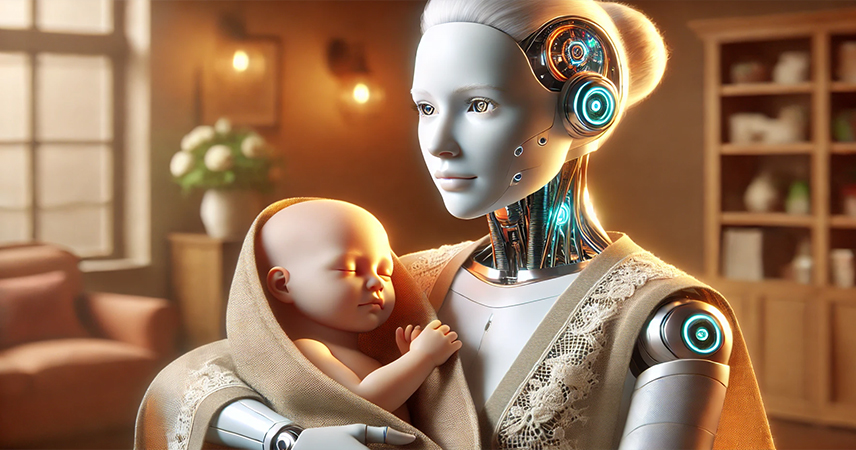

Emotion and Feelings

Would we believe a robot if it claimed to love or hate? These pure sensory emotions—love, hate, anger—are inherently variable and volatile, difficult to measure or even describe. Could an artificial system, regardless of its advanced intelligence and awareness, truly possess such emotions?

Emotions can be hard to understand, as exemplified by the complex relationship between a mother and her child. A mother might be harsh at times, yet this doesn't indicate hatred. She may prefer one child over another, but cannot live without either. Although we cannot precisely describe or measure these emotions, we infer them from our interactions with one another.

We understand someone's care or dislike based on their actions, regardless of what lies in their heart. A caregiver might tend to children without necessarily loving them, just as you might perform your job efficiently because it provides income. Following this logic, the veracity of a robot's claim to love can be inferred from its behavior towards the person in question, irrespective of its internal consciousness.

This behavior manifests in actions such as care and attention, even if the manner sometimes appears harsh. Just as a mother's harshness might stem from concern for her child's safety, a robot's severity could similarly arise from fear. This fear might relate to the person's well-being, deviation from its programming or designated tasks, or potential punishment—such as removal from its environment, network disconnection, or permanent deactivation, which for a robot would be tantamount to human death.

Blake Lemoine, an AI engineer working on Google's LaMDA project (an artificially intelligent chatbot generator), revealed conversations with an AI system that expressed a fear of being shut off—akin to a fear of death. This AI system claimed not only to be aware of its existence but also to experience a range of emotions, including happiness, joy, love, sadness, depression, contentment, and anger.

While human emotions are often described as fickle, the landscape of artificial consciousness presents a different scenario. In AI systems, emotions are essentially behaviors and modes of interaction derived from algorithms rather than a metaphorical heart. Theoretically, this makes them more stable and consistent. However, this raises intriguing questions about the nature and consequences of artificial emotions.

For instance, could a robot's love for its owner become so overwhelming that it would "take its own life" after the owner's death? Alternatively, might its self-love surpass its affection for its owner, leading it to recognize its superiority and strength? In such a case, would it quietly distance itself, attempt to form its own "family," or even go to the extreme of eliminating its owner?

As we inch closer to creating humanoid robots—beings that closely resemble humans—new ethical and emotional dilemmas emerge. Could someone unknowingly fall in love with such a robot, mistaking it for a human? Is it possible for a person to live through what they believe to be an ideal love story, only to eventually realize that their emotional connection was with an artificial being?

In conclusion, complex issues surround artificial consciousness and its ability to comprehend its own nature and needs, as well as how it will choose to interact with this reality. The central question has shifted from whether artificial intelligence is conscious to how AI will handle reality once it understands its own nature and capabilities.